Nvidia unveils new AI model capable of modifying voices

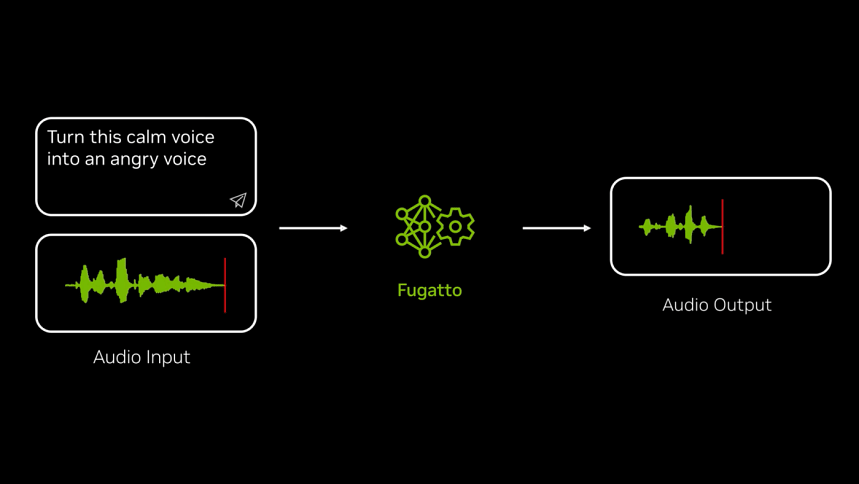

Nvidia has introduced a new generative AI model, Fugatto, that can generate and transform audio, music, and voices from text and audio prompts. The technology targets industries such as music production, film, and video games, offering tools for creating and modifying audio content, according to an official Nvidia blog post.

Fugatto, short for Foundational Generative Audio Transformer Opus 1, stands out from existing AI models due to its ability to process and alter existing audio in addition to generating entirely new sounds, says Nvidia. For example, it can transform a piano recording into a vocal rendition, alter a speaker's accent or emotional tone, or generate entirely novel sounds like a trumpet mimicking a dog's bark.

The model combines capabilities such as temporal interpolation, which allows the creation of dynamic audio that evolves over time, and ComposableART, a feature that lets users merge multiple instructions, for example to generate a sad voice with a French accent. Users can also control the degree of change, such as the intensity of an accent or emotion, Nvidia adds in its blog post.

Fugatto has 2.5 billion parameters and was trained on open-source data using Nvidia's DGX systems with 32 H100 Tensor Core GPUs.

Nvidia has not announced plans to publicly release the model, citing concerns about misuse, including generating misinformation or copyrighted material.

While Nvidia emphasised the model's potential to reshape audio creation, it acknowledged the risks and the importance of cautious implementation. Other tech companies, including OpenAI and Meta, have also hesitated to release similar generative AI models, according to a Reuters article.

For all latest news, follow The Daily Star's Google News channel.
