Generative AI uses 30 times more energy than search engines: research

Sasha Luccioni, a prominent researcher recognised by Time magazine as one of the 100 most influential people in AI, has raised concerns about the heavy energy consumption of AI, especially generative models such as ChatGPT and Midjourney. According to her research, these systems use up to 30 times more energy than traditional search engines because they do not simply retrieve information; they generate new content in response to user input.

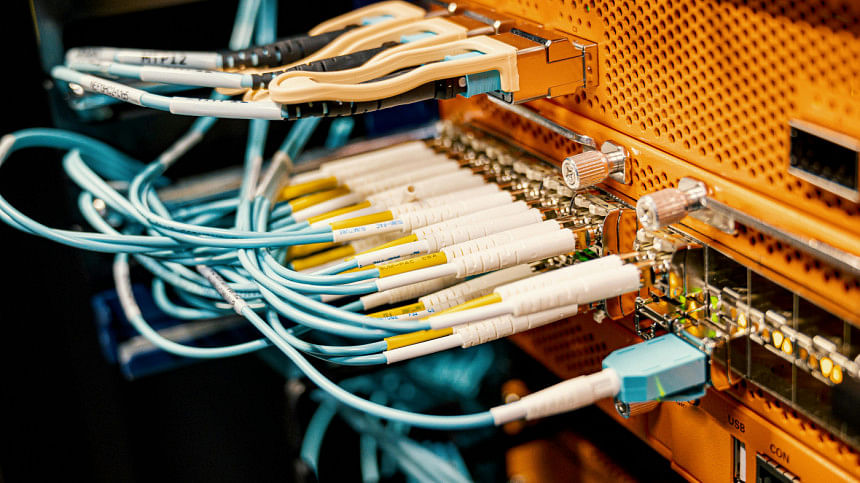

Speaking at the ALL IN Artificial Intelligence conference in Montreal, Luccioni explained that training AI models on vast amounts of data requires powerful servers that consume large amounts of electricity. Once a model is deployed, answering each user request continues to draw significant energy.

According to the International Energy Agency, AI and the cryptocurrency sector together used around 460 terawatt hours of electricity in 2022, about 2% of all the electricity produced worldwide, a recent AFP report states. That share is expected to grow as AI becomes more common.
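
To put those two figures in proportion, the short Python sketch below works backwards from them. It uses only the numbers quoted above (460 terawatt hours and a 2% share); the function name `implied_global_twh` is made up for illustration, and the result is a rough back-of-the-envelope value, not an official statistic. It comes out at roughly 23,000 terawatt hours of electricity produced worldwide in 2022.

```python
# Figures as quoted in the article (IEA, via AFP).
AI_AND_CRYPTO_TWH = 460.0   # electricity used by AI and crypto in 2022
SHARE_OF_WORLD = 0.02       # "about 2%" of worldwide production


def implied_global_twh(sector_twh: float, share: float) -> float:
    """Return the worldwide total implied by a sector's usage and its share."""
    if not 0 < share <= 1:
        raise ValueError("share must be a fraction between 0 and 1")
    return sector_twh / share


if __name__ == "__main__":
    total = implied_global_twh(AI_AND_CRYPTO_TWH, SHARE_OF_WORLD)
    print(f"Implied worldwide production: about {total:,.0f} TWh")
```

Because the 2% share is itself rounded, the implied total should be read as an order of magnitude only.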

To help reduce AI's environmental impact, Luccioni helped create a tool called CodeCarbon in 2020, which developers can use to measure the carbon footprint of their code. More than a million people have used it so far, according to the AFP report.
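
For readers curious what that looks like in practice, here is a minimal sketch of wrapping a piece of Python code with CodeCarbon's `EmissionsTracker`. It assumes the `codecarbon` package is installed; the helper names `measure_emissions` and `format_emissions` are invented for this example and are not part of the tool. The estimate depends on the hardware and on the regional electricity mix the tracker detects, so results will vary from machine to machine.

```python
from typing import Callable, Optional


def measure_emissions(workload: Callable[[], object]) -> Optional[float]:
    """Run `workload` and return CodeCarbon's estimate in kg of CO2-equivalent.

    Hypothetical helper: the import is done lazily so this module still loads
    on machines where codecarbon is not installed.
    """
    from codecarbon import EmissionsTracker

    tracker = EmissionsTracker()  # default settings; also logs results to a CSV file
    tracker.start()
    try:
        workload()
    finally:
        # stop() ends the measurement and returns the estimated emissions.
        emissions_kg = tracker.stop()
    return emissions_kg


def format_emissions(emissions_kg: float) -> str:
    """Format a kg CO2eq figure in grams for easier reading."""
    return f"{emissions_kg * 1000:.3f} g CO2eq"
```

A call such as `measure_emissions(lambda: sum(i * i for i in range(10**6)))` would run the small calculation under the tracker, and the returned value can be passed to `format_emissions` for display.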

Luccioni is currently working on a system to rate AI models based on how energy-efficient they are, similar to energy labels on appliances like refrigerators. The idea is to help people make better choices by showing which AI tools are more energy-efficient and which ones use more power.

Despite pledges from companies like Google and Microsoft to become carbon-neutral by the end of the decade, their greenhouse gas emissions have risen in recent years, largely because of the increased use of AI. Google's emissions have jumped 48% since 2019, and Microsoft's have risen 29% since 2020, according to the AFP report. Luccioni believes these companies need to be more open about how their AI models are trained and how much energy they use so that governments can step in with rules to manage the environmental impact.

Luccioni is not against AI, but she urges companies and individuals to use it wisely. She advocates for what she calls "energy sobriety", meaning we should choose AI tools carefully and use them only when necessary. For example, her research found that generating just one high-definition image with AI uses as much energy as fully recharging a phone. With AI being integrated into more parts of daily life, from chatbots to online searches, she believes it's important to think about how much energy is being used and at what cost.

For all latest news, follow The Daily Star's Google News channel.
