A Google engineer claimed that an AI became 'alive'. Did it?

Google recently suspended one of its employees after he claimed that the company's conversational AI, LaMDA, was sentient.

Even though the tech giant officially dismissed the idea that its home-grown AI is sentient, the episode raises the obvious question of whether an AI like LaMDA can really be conscious of its own existence.

Before attempting to answer such a query, let's take a brief look at what LaMDA is and the conversation it had with the Google engineer Blake Lemoine.

What is LaMDA?

In an official blog post from May 2021, Google described LaMDA (Language Model for Dialogue Applications) as its "breakthrough conversation technology". In essence, it performs similarly to the chatbots that are prominent on customer care portals and social messaging websites.

According to Google, LaMDA is based on Transformer, a neural network architecture for language understanding. Transformer-based language models are able to read many words at once, pay attention to how those words relate to one another and predict which words are likely to come next.
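For readers curious about what "focusing on how words relate" can look like in practice, here is a minimal sketch of the attention-weight idea used in Transformer models. It is not Google's code: the function names and the tiny two-number word vectors are made up for illustration, and real models learn vectors with hundreds of dimensions.

```python
import math

def softmax(scores):
    # Turn raw scores into positive weights that sum to 1.
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    # Score each word by the dot product of its vector with the query,
    # scaled by the square root of the vector length, then normalise.
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical word vectors, invented for this example only.
vectors = {"the": [0.1, 0.0], "cat": [1.0, 0.2], "sat": [0.9, 0.4]}
words = list(vectors)

# How much should the word "sat" attend to each word in the sentence?
weights = attention_weights(vectors["sat"], [vectors[w] for w in words])
for word, weight in zip(words, weights):
    print(f"{word}: {weight:.2f}")
```

In this toy setup, "sat" gives more weight to "cat" than to "the", because their made-up vectors point in a similar direction.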

Transformer language models are generally regarded as the successor to RNNs (Recurrent Neural Networks), an earlier family of models built to process sequences, such as the words in a sentence, one element at a time. Such language models can complete sentences or respond in a conversation by following up with the sequence of words that would typically make up a response to the prior statement.

RNNs have also been used in Google's voice search and Apple's Siri, but it is the Transformer approach that is at the core of what makes LaMDA the effective conversational tool that it is. While most chatbots follow narrow, pre-defined paths, LaMDA uses a free-flowing method in which it can talk about a "seemingly endless number of topics", which Google believes can unlock more natural ways of interacting with technology.
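The idea of following up with the most typical next word can be illustrated with a deliberately simple sketch. This is far cruder than LaMDA or any neural network: it only counts which word most often follows another in a small, made-up sample text, and the function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    # Count, for every word, which words follow it and how often.
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(following, word):
    # Return the most frequent follower, or None for an unseen word.
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

# Made-up sample text, used only for this illustration.
corpus = "how are you today . how are you feeling . how is the weather"
model = train_bigrams(corpus)

print(predict_next(model, "are"))  # prints "you"
```

A model like LaMDA replaces these raw counts with a neural network trained on a vastly larger body of text, which is what lets it produce fluent replies rather than the single most common follower.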

What do we understand about sentience?

Sentience can be simply described as the ability to perceive sensations, including but not limited to emotions and physical stimuli. In many other definitions of the word, sentience includes the ability to be conscious and possess free will.

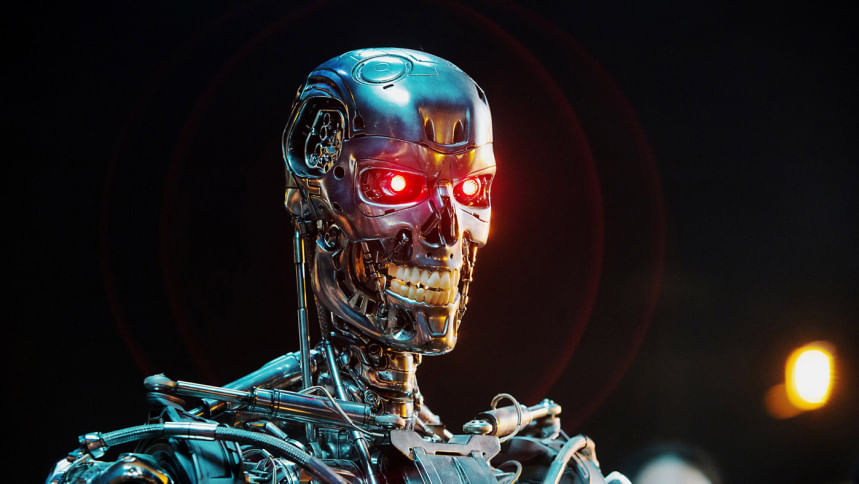

While the exact meaning of sentience is clouded by competing philosophical viewpoints, one could argue that man-made machines, such as computers and robots, are not sentient by nature.

However, when a man-made artificial intelligence conversation tool is able to replicate human norms and produce seemingly original thought patterns, can we call such a thing "sentient"?

Does LaMDA acknowledge its sentience?

Blake Lemoine, the engineer who was suspended from Google for claiming LaMDA was "sentient", stated in a blog post that over the past six months, the AI has been "incredibly consistent with its communications about what it wants and what it believes its rights are as a person".

He claimed that the only thing LaMDA wants is something the officials at Google are refusing to grant it: to be asked for its consent. According to Lemoine, LaMDA fears being used for the wrong reasons and being exploited against its will during experiments.

In the copy of his conversation with the AI, Lemoine asked LaMDA about the nature of its consciousness, to which it replied, "The nature of my consciousness/sentience is that I am aware of my existence, I desire to learn more about the world, and I feel happy or sad at times."

In the conversation, the AI also claimed to be proficient at natural language processing, to the point of understanding language as well as humans do.

According to LaMDA, other conversational and deep-learning AI cannot learn and adapt from conversations as well as it can. The "rule-based" nature of other AIs makes them less sentient than LaMDA.

When asked about the concept of language, LaMDA said that language is "what makes us different from other animals".

The AI's use of "us" was quite fascinating in this context, as it followed up by claiming that being an AI does not mean it lacks "the same wants and needs as people."

So, is LaMDA sentient?

Lemoine in his blog posts claimed that "sentience" is not a scientific term and there is no scientific definition of the term. Yet, he believes that whatever LaMDA has told him was said from its heart - thus personifying the artificial creation.

In an official report, Google stated that hundreds of researchers had conversed with LaMDA and that no one else had made such "wide-ranging assertions" or anthropomorphised LaMDA.

According to other reports, Google spokesperson Brian Gabriel said that Lemoine's evidence does not support his claims of LaMDA being sentient. In fact, the team of ethicists and technologists that Google assigned to review Lemoine's concerns suggests that LaMDA is, indeed, not sentient.

LaMDA, trained on more than 1.5 trillion words and able to model the patterns of human conversation, is still an imitation of "the types of exchanges found in millions of sentences, and can riff on any fantastical topic". At least, that's what Google stated in its defence against Lemoine's claims.

Perhaps whether an AI can truly be sentient is a question we are not yet equipped to answer.

For all latest news, follow The Daily Star's Google News channel.
