Google is going all AI: Biggest announcements from I/O 2024

Google's annual I/O developer conference, held on May 14, 2024, unveiled a series of AI-driven upgrades across the company's products. Here are the key highlights from Google I/O 2024.

The Gemini era

During the event, Google revisited its vision for Gemini, a model designed to process diverse forms of data like text, images, video, and code.

Over the past year, Google has introduced several Gemini models, including Gemini 1.5 Pro, which can process long contexts, a capability Google presents as a critical breakthrough for developers building with AI.

At the event, Sundar Pichai, CEO of Google and Alphabet, said, "Today more than 1.5 million developers use Gemini models across our tools. You're using it to debug code, get new insights, and build the next generation of AI applications."

The impact of Gemini extends beyond the developer community: all of Google's products with 2 billion users now incorporate Gemini's capabilities. 'Gemini Advanced' attracted over 1 million users within three months of its introduction, underscoring the growing demand for AI-driven functionality.

Google Photos gets 'Ask Photos'

In personal content management, Google Photos has received a major upgrade powered by Gemini. The new 'Ask Photos' feature allows users to retrieve specific memories, from recalling licence plate numbers to tracking personal milestones. 'Ask Photos' uses multimodal AI to provide personalised summaries and insights, making content easier to find.

Expanding long context capabilities

With Gemini 1.5 Pro, Google is now offering a long context window of 2 million tokens, which is available to developers in private preview. Meanwhile, Gemini 1.5 Pro with a 1 million-token context window is directly available to consumers in Gemini Advanced across 35 languages.

Gemini 1.5 Pro in Workspace

The integration of Gemini's multimodal and long context capabilities into Google Workspace marks a strategic move towards enhancing productivity tools. For instance, Gemini can summarise email attachments like PDFs intelligently, and give highlights of a meeting held on Google Meet, empowering users with contextual insights and information.

AI transformations in Google Search

Gemini's influence is most pronounced in Google Search, where it has facilitated a shift in user interaction. The enhanced Search Generative Experience now accommodates complex queries, even supporting searches via images. Building on successful testing outside of Labs, Google is rolling out 'AI Overviews' to enhance Search experiences for users in the U.S., with plans for a broader global rollout.

Circle to Search and LearnLM for learning with AI

The AI-driven Circle to Search feature is expanding its capabilities to solve more intricate problems, including physics and mathematical puzzles. This enhancement aims to make interaction with Google Search more intuitive, allowing users to circle, highlight, scribble, or tap directly on their devices. The feature is particularly useful for helping students with homework directly from supported Android phones and tablets. With the capabilities of LearnLM, it will not only solve problems but also give contextual answers: for maths problems, it will show every step of the solution, not just the final answer.

Introducing audio outputs in NotebookLM

Expanding beyond text outputs, NotebookLM now incorporates audio outputs, using Gemini 1.5 Pro to transform source materials into personalised, interactive audio conversations.

Trillium TPUs

Google has announced Trillium, the sixth generation of its Tensor Processing Units (TPUs), which delivers a 4.7 times improvement in compute performance per chip compared with its predecessor, TPU v5e. It will be available to Cloud customers in late 2024.

Enhanced multimodal capabilities with Gemini Nano

With Gemini Nano, Google will deliver fast, personalised experiences while prioritising the privacy of user data. Later this year, beginning with Pixel devices, Google will introduce the latest Gemini Nano model with multimodality. This means that the phone will not only process text inputs but also understand contextual information such as visual cues, sounds, and spoken language.

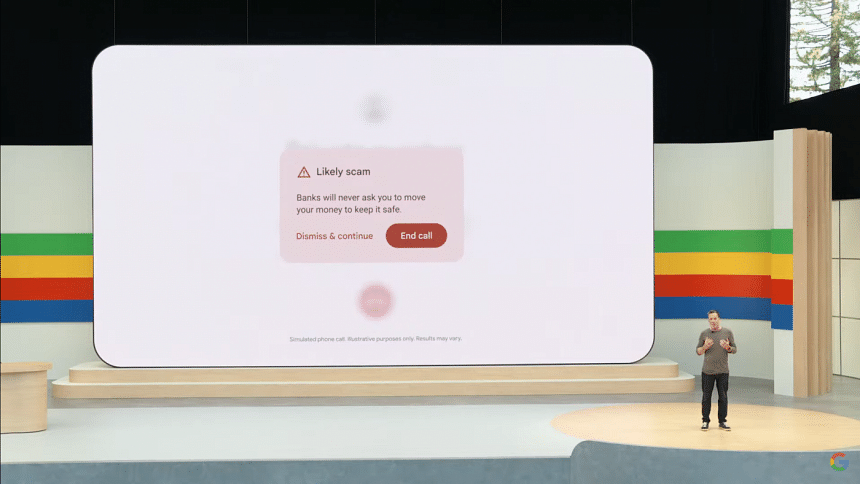

Scam alert

Google is testing a feature that uses Gemini Nano to deliver real-time alerts during phone calls by identifying conversation patterns commonly associated with scams. For instance, if a caller purporting to be a "bank representative" urges fund transfers, requests payment via gift cards, or solicits sensitive information like card PINs or passwords, all of which are unusual requests for legitimate banks, the feature will promptly notify the user. This protection operates entirely on-device, keeping user conversations private. Further details about this feature will be shared later this year.

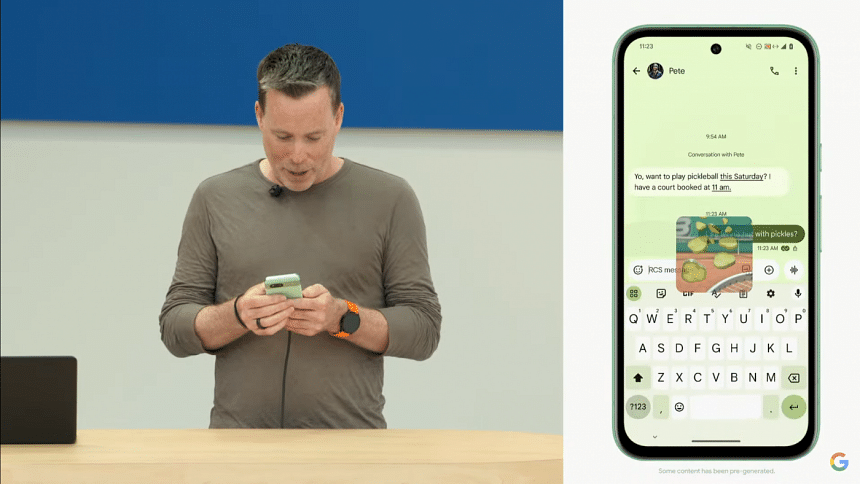

Gemini on Android

Google's Gemini is set to integrate deeply with the Android mobile operating system and Google's suite of apps. This integration will allow users to drag and drop AI-generated images into Gmail, Google Messages, and other applications. Moreover, YouTube users will soon have access to a new feature called "Ask this video", enabling them to retrieve specific information directly from within YouTube videos.

Responsible use of AI

Looking ahead, Google envisions AI agents capable of reasoning and planning across various tasks. The potential applications range from streamlining online shopping to helping individuals navigate new environments. Google also shared details of its AI-assisted red teaming, SynthID, and collaborations aimed at improving models like Gemini and protecting against their misuse.

The announcements made at Google I/O 2024 show how Google is emphasising the responsible use of AI alongside data privacy.

For all latest news, follow The Daily Star's Google News channel.
