NVIDIA unveils GB200 Grace Blackwell, the AI 'superchip'

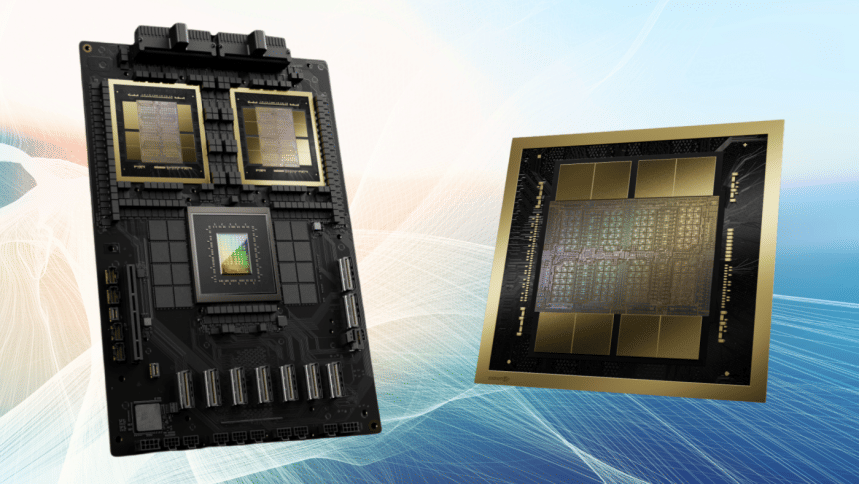

NVIDIA, the multinational tech company, has announced the GB200 Grace Blackwell Superchip and the Blackwell B200 GPU, technologies with the potential to significantly advance large language models (LLMs) for generative AI.

By combining two NVIDIA B200 Tensor Core GPUs and an NVIDIA Grace CPU, the GB200 'superchip' boasts ultra-low-power NVLink chip-to-chip interconnection with a transfer rate of 900 GB/s, says an official press release by NVIDIA.

For even greater performance, GB200-powered systems can be linked with NVIDIA's newly announced Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms, reaching networking speeds of up to 800 Gb/s, adds the press release. This ensures smooth data flow across multiple GB200 units.

NVIDIA adds that the true potential of GB200 is unleashed in the NVIDIA GB200 NVL72 system. This multi-node behemoth is a liquid-cooled, rack-scale system built to tackle the most demanding AI workloads, connecting 36 Grace CPUs and 72 Blackwell GPUs.

The resulting GB200 NVL72 system can deliver up to a 30 times performance boost compared to the same number of previous-generation NVIDIA H100 Tensor Core GPUs for LLM inference tasks. This translates to not only faster processing but also significant savings, with cost and energy consumption reduced by up to 25 times, says the tech giant.

With a whopping 1.4 exaflops of AI performance and a massive 30 TB of high-speed memory, the GB200 NVL72 acts as a single, powerful GPU. This innovation forms the building block for the next generation of DGX SuperPODs, high-performance computing systems specifically designed for AI applications.

The arrival of the GB200 Grace Blackwell and its supporting technologies marks a significant advancement in AI processing capabilities. Experts believe that with its top-notch performance and efficiency, it has the potential to revolutionise various AI fields, from creating more powerful LLMs to advancing the realms of scientific research and drug discovery.

For all latest news, follow The Daily Star's Google News channel.
