Google apologises for racist blunder

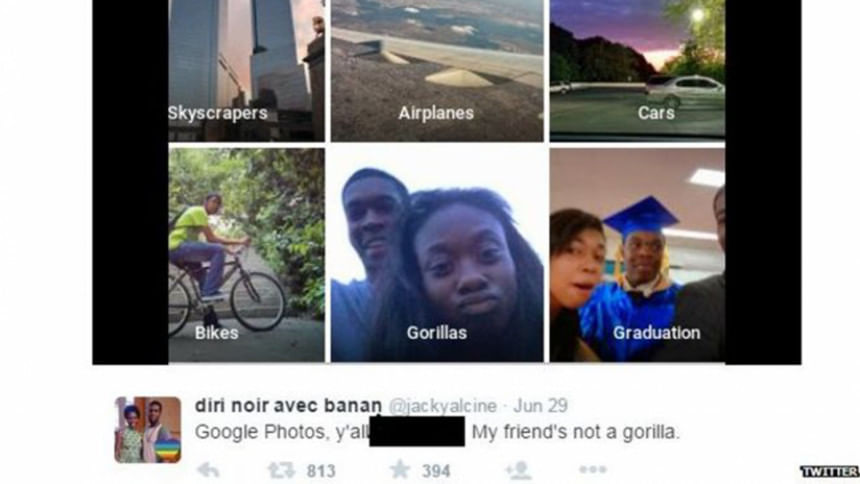

Google says it is "appalled" that its new Photos app mistakenly labelled a black couple as being "gorillas".

Its product automatically tags uploaded pictures using its own artificial intelligence software.

The error was brought to its attention by a New York-based software developer who was one of the people pictured in the photos involved.

Google was later criticised on social media because of the label's racist connotations.

"This is 100% not OK," acknowledged Google executive Yonatan Zunger after being contacted via Twitter by the developer, Jacky Alcine.

"[It was] high on my list of bugs you 'never' want to see happen."

Zunger said Google had already taken steps to avoid others experiencing a similar mistake.

He added it was "also working on longer-term fixes around both linguistics - words to be careful about in photos of people - and image recognition itself - eg better recognition of dark-skinned faces".

This is not the first time Google Photos has mislabelled one species as another.

The news site iTech Post noted that the app was tagging pictures of dogs as horses in May.

Users can remove incorrect labels from photos within the app, which should help it become more accurate over time - an approach known as machine learning.

However, Google has acknowledged the sensitivity of the latest mistake.

"We're appalled and genuinely sorry that this happened," a spokeswoman told the BBC.

"We are taking immediate action to prevent this type of result from appearing.

"There is still clearly a lot of work to do with automatic image labelling, and we're looking at how we can prevent these types of mistakes from happening in the future."

But Alcine told the BBC that he still had concerns.

"I do have a few questions, like what kind of images and people were used in their initial priming that led to results like these," he said.

"[Google has] mentioned a more intensified search into getting person of colour candidates through the door, but only time will tell if that'll happen and help correct the image Silicon Valley companies have with intersectional diversity - the act of unifying multiple fronts of disadvantaged people so that their voices are heard and not muted."

For all latest news, follow The Daily Star's Google News channel.
