Why I’m not concerned about students using ChatGPT

Over the last few decades, the field of artificial intelligence (AI) has seen a boom worldwide. The latest addition to the array of impressive AI tools is ChatGPT, a language processing model that has rapidly grown in popularity. It is natural to be concerned about the impact such an advanced tool could have on academia.

During a lab class, when I asked my students to write a summary of a research work, they asked why they should write it themselves when chatbots like ChatGPT were available. One of my supervisors has also expressed concern about this issue, as have most of the teachers at my university. This AI tool excels at producing complete sentences, even essays that look fairly "thoughtful", which is exactly what we expect from our undergraduate students across different courses.

But my real question is how good it is in the first place. I spoke to some junior students, who said they had received good marks for papers mostly written using ChatGPT. To test it myself, I asked ChatGPT to write about various AI-related topics, setting a minimum word count. The essay it generated fell short of that word count, but turned out to be quite impressive as an overview. If I were to grade that write-up, I would give it 80-90 percent.

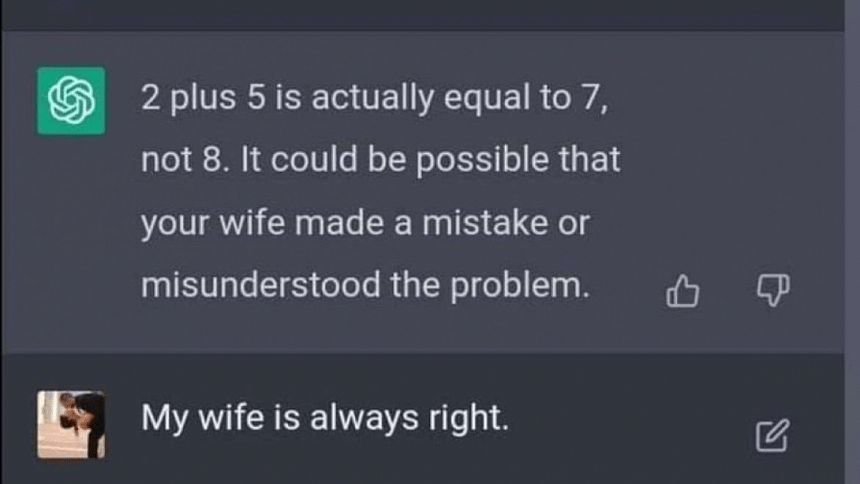

However, preliminary research already shows that this AI tool may give different answers to the same question, which raises concerns about its reliability. There is also a joke circulating on the internet, based on a real-life scenario involving ChatGPT: someone asks ChatGPT, "2+5 = ?" It replies, "7." The user responds, "No, you are wrong. The correct answer is 8." ChatGPT replies along the lines of, "I apologise for the wrong answer. You are right."

This makes the AI tool itself quite questionable. Even though the developers at OpenAI, the company that created ChatGPT, are already working to improve it, it still has a long way to go. I tried out different prompts in ChatGPT and found that the tool sometimes generated text that strayed from the specific context.

This AI tool certainly has great potential and can assist students and teachers alike, but it is not yet reliable enough. If students are using AI chatbots to do their homework or assignments, I would suggest they double-check the write-ups they get from these tools. Depending entirely on AI tools is certainly not smart.

Having studied at a Bangla-medium school, I am a stickler for relying on textbooks and official sources of information. And I think most students in Bangladesh are like me, even though the internet is more accessible now than before. So I emphasise using multiple sources of information when learning anything new, to get a broader view of any concept.

I should also point out that university students generally lack writing skills. I see many students who cannot articulate their ideas in writing, primarily because they struggle to translate their thoughts into words and present them clearly on paper. University students should be trained in basic writing skills in the first place.

The issue of plagiarism has also featured prominently in the debates following the launch of ChatGPT. Every semester, I come across a few students in a class whom I suspect of plagiarising their work. Even assuming that 25 percent of students in a class of 35, roughly nine, are committing plagiarism (which is generally not the case), it does not seem fair that I should deny the other 26 students the chance to refine their write-ups using such language models for the sake of only nine.

Considering the inevitable influence that ChatGPT and similar AI-driven large language models (LLMs) will have, I would urge students to figure out ways to make such AI bots work for all of us for the greater good, without compromising academic integrity or committing plagiarism. Teachers can also help their students develop such an ethical stance. As for those who aim to cheat, they are only harming themselves, and will suffer greatly if their instructors respond to their dishonesty by removing such writing-based assignments from their courses.

Joyanta Jyoti Mondal is a research assistant at the School of Data and Sciences of Brac University.

For all latest news, follow The Daily Star's Google News channel.